Marketers often don’t know the source(s) of their direct traffic, which leaves them somewhat powerless. Without knowing where the traffic comes from, they can’t determine how to attract more or whether it’s meaningful.

It’s impossible to parse out every referring source of your direct traffic (and trying isn’t recommended). But it is worth improving your understanding of this often mysterious topic. Use this guide to learn where direct traffic comes from, clean up your source tracking, and keep your content analytics actionable.

What is direct traffic?

Direct traffic, sometimes called “dark traffic,” doesn’t have a defined source or referring site. In other words, it’s traffic to your site that arrives without a referrer. The term “direct traffic” is best known from Google Analytics. It contrasts with attributed channels such as organic search traffic, where the analytics platform can see which search engine sent the visitor.

Content analytics platforms may label traffic as direct because of user behavior, such as reaching a site by typing a URL, or because tracking technology is absent.

Direct traffic can make up a significant portion of a website’s traffic. Because direct traffic is so common, it’s built a reputation for being the “catch-all” traffic category on analytics platforms.

Marketers’ knee-jerk reaction is to try to drive direct traffic down to zero. Make no mistake: sorting through all direct traffic to find every referral source is impossible.

But you can do your best to attribute at least some of your direct traffic. Content analytics platforms are especially helpful with this investigation process. You can start by learning about the main types of direct traffic sources.

6 surprising sources of direct website traffic (and how to better track them)

Many content marketers assume direct traffic comes from users typing addresses into a URL bar. They take the name literally, believing visitors must reach a site “directly” for the visit to count, but the label reflects how Google Analytics classifies visits that lack source data, not necessarily how people arrived.

Of course, that is one source of direct traffic. But there are other ways it can be generated.

Here, we’ll detail those sources and how you can minimize direct traffic from them.

1. Bookmarks and manual website entry

As long as bookmark and URL bars exist on browsers, sites will likely have direct traffic. Bookmarking and manual website entry lead to direct traffic because no referral site sent users. Without that traceable referral source, analytics platforms mark the traffic as direct.

Bookmarking may generate direct traffic, but it’s largely a positive sign for sites. Users love your site so much that they want to reach it with just one click.

The same goes for manual website entry. Your brand is so memorable that users don’t need search engines to get there. Considering these factors, you have no reason to minimize bookmarking and manual website entry for the sake of cutting direct traffic.

2. Dark social

Dark social includes sharing links via email, texting, or direct social messaging.

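Content analytics platforms can’t track this link sharing because it happens in private channels: when someone clicks a link in an email client or messaging app, the visit usually arrives without a referrer.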

Facebook used to have a feature on the Timeline that let users quickly share links to articles in private messages, but it has since been removed. Facebook also decoupled messaging from its main mobile app, so users must download a separate app to message privately. These changes minimize the potential for direct traffic and improve tracking for advertisers.

Finding sources for dark social traffic is particularly difficult, so you must rigorously track social and email campaigns with UTM parameters. Encouraging followers to share links publicly also reduces the amount of traffic you get from dark social sources.
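One way to put this into practice is to pre-tag the URLs in your own share buttons, so a link forwarded by email still carries attribution. The Python sketch below is illustrative only: the helper names (tag_url, email_share_link) and the UTM values "share" and "email" are hypothetical choices, not a required convention.

```python
from urllib.parse import parse_qsl, quote, urlencode, urlsplit, urlunsplit


def tag_url(url: str, source: str, medium: str, campaign: str) -> str:
    """Append utm_* parameters to url, keeping any query string it already has."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(query)))


def email_share_link(article_url: str, subject: str, campaign: str) -> str:
    """Build a mailto: link whose body contains a UTM-tagged article URL."""
    # Hypothetical UTM values: "share" as the source, "email" as the medium.
    tagged = tag_url(article_url, "share", "email", campaign)
    # quote_via=quote encodes spaces as %20 (mailto: URIs don't treat + as a space).
    return "mailto:?" + urlencode({"subject": subject, "body": tagged}, quote_via=quote)


print(email_share_link("https://example.com/post", "Worth a read", "spring_sale"))
```

This only helps when readers use your share buttons; a link copied straight from the address bar still travels untagged.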

3. Broken or missing UTM parameters and referrers on links

UTM parameters attribute traffic to a specific campaign or referral source. In a tagged URL, they are the utm_ key-value pairs in the query string (for example, utm_source, utm_medium, and utm_campaign) that analytics tools read for attribution. UTM parameters improve tracking, but it’s difficult to attribute traffic if they’re broken or absent.
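To see what attribution data a tagged link actually carries, you can pull the UTM fields out of a landing-page URL. This minimal Python sketch uses only the standard library; the example URL and campaign values are made up for illustration.

```python
from urllib.parse import parse_qs, urlsplit

# The five classic UTM fields.
UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content")


def utm_attribution(url: str) -> dict:
    """Return the UTM fields present in url (first value of each)."""
    params = parse_qs(urlsplit(url).query)
    return {key: params[key][0] for key in UTM_KEYS if key in params}


landing = (
    "https://example.com/spring-sale"
    "?utm_source=newsletter&utm_medium=email&utm_campaign=spring_sale"
)
print(utm_attribution(landing))
# {'utm_source': 'newsletter', 'utm_medium': 'email', 'utm_campaign': 'spring_sale'}
```

A visit whose landing URL yields an empty dictionary here, and that also arrives without a referrer, is the kind of visit analytics tools are likely to file under direct traffic.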

Every campaign should use UTM parameters, but marketers in a rush sometimes skip creating them. Don’t make this mistake: UTM parameters are critical for tracking the sources of your campaign traffic and determining ROI.

To monitor your traffic, check out our best practices for campaign tracking via UTMs. Beyond that, we have some general pointers for campaign tracking:

- Set a to-do to create UTM parameters for a campaign landing page (potentially as part of a broader project checklist).

- Regularly audit your UTM parameters.

- Avoid spaces in UTMs, as they can cause your tracking to malfunction.
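A regular UTM audit can be partly automated. The sketch below checks a list of campaign URLs for two of the problems mentioned in this section: missing UTM parameters and spaces in UTM values. Treating utm_source, utm_medium, and utm_campaign as required is our assumption; adjust the list to match your own tagging conventions.

```python
from urllib.parse import parse_qs, urlsplit

# Assumed minimum set of parameters every campaign link should carry.
REQUIRED = ("utm_source", "utm_medium", "utm_campaign")


def audit_utm(url: str) -> list:
    """Return human-readable problems found in the UTM parameters of url."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    problems = []
    for key in REQUIRED:
        # A key that is absent, or present but empty, counts as missing.
        if not params.get(key, [""])[0]:
            problems.append(f"missing {key}")
    for key, values in params.items():
        if key.startswith("utm_"):
            for value in values:
                # parse_qs decodes %20 and + back to spaces, so both forms are caught.
                if " " in value:
                    problems.append(f"space in {key}: {value!r}")
    return problems


campaign_links = [
    "https://example.com/?utm_source=newsletter&utm_medium=email&utm_campaign=spring_sale",
    "https://example.com/?utm_source=news%20letter&utm_medium=email",
]
for link in campaign_links:
    print(link, audit_utm(link) or "ok")
```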

To be a data-driven marketer, you can’t neglect these steps (especially for one-time campaigns). Build a habit of adding UTM parameters before shipping a campaign so you’re set to track your traffic sources.

4. Broken or improper redirects

Even if you have UTM tracking set up on links, you still may not be able to trace traffic back to its referring site if the pages involved have these common redirect issues:

- Broken redirect chains: Chains of redirects that loop back to the starting page or pass through incorrect or nonexistent URLs.

- Meta refreshes: Redirects triggered by a meta refresh tag in the page’s HTML rather than by the server. These are associated with poor user experience and weaker tracking because they can wipe referral data.

- JavaScript-based redirects: Server-side 301 redirects are preferred over JavaScript-based redirects, which frequently wipe referral data while redirecting.

With too many redirects, Google Analytics often can’t trace the traffic back to its original source. Each additional hop is another chance for UTM parameters and referrer information to be stripped out.
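If you can export your redirect rules as source-to-destination pairs (for example, from server configuration or a site crawl), a short script can flag the problem chains described in this section. This is a sketch over a hypothetical in-memory map; the two-hop limit is an arbitrary threshold chosen for illustration, not a rule from Google Analytics.

```python
def check_chain(start: str, redirects: dict, live_pages: set, max_hops: int = 2):
    """Follow redirects from start and classify the resulting chain."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            # The chain points back to a page it already visited.
            return chain + [target], "loop"
        chain.append(target)
    if chain[-1] not in live_pages:
        # The chain ends at a URL that doesn't serve a real page.
        return chain, "dead end"
    if len(chain) - 1 > max_hops:
        return chain, "too many hops"
    return chain, "ok"


# Hypothetical redirect map and set of URLs that return a real page.
redirects = {"/old": "/older", "/older": "/new", "/a": "/b", "/b": "/a", "/promo": "/missing"}
live_pages = {"/new"}
for path in ("/old", "/a", "/promo"):
    print(path, check_chain(path, redirects, live_pages))
```

Collapsing a flagged chain so each old URL points straight at its final destination gives referral data fewer chances to be lost.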

Work with your development team to minimize direct traffic from redirects. Can you streamline your redirect protocol? If you recently switched to another domain, can you discontinue redirects from the old URL (assuming it’s not receiving significant traffic)? To read more on how to minimize redirects, check out this guide from the Ahrefs blog.

5. Redirects from HTTPS > HTTP

By default, browsers don’t send referral data when a user clicks a link on an HTTPS site that leads to an HTTP one. This can happen if your HTTPS certificate lapses or if a referring site recently switched its protocol to HTTPS while your site still uses HTTP.
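The reason is the browser’s referrer policy. Current versions of major browsers default to strict-origin-when-cross-origin, under which a link from HTTPS to HTTP sends no Referer header at all. The Python function below is a simplified model of that single policy, written to illustrate the rule; it ignores per-page Referrer-Policy overrides, credentials in URLs, and other edge cases, so treat it as a sketch rather than a browser-accurate implementation.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Scheme, host, and port: the parts that define a web origin."""
    parts = urlsplit(url)
    return (parts.scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(parts.scheme))


def referrer_sent(from_url: str, to_url: str) -> Optional[str]:
    """Approximate the Referer value sent under strict-origin-when-cross-origin."""
    src = urlsplit(from_url)
    if src.scheme == "https" and urlsplit(to_url).scheme == "http":
        return None  # HTTPS -> HTTP downgrade: no referrer, so the visit looks direct
    if origin(from_url) == origin(to_url):
        return urlunsplit(src._replace(fragment=""))  # same origin: full URL minus #fragment
    return f"{src.scheme}://{src.netloc}/"  # cross-origin: origin only


print(referrer_sent("https://partner.example.org/review", "http://example.com/"))
```

When both sites use HTTPS, the cross-origin branch applies instead, and at least the referring origin survives for your analytics to read.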

If you use the HTTP protocol and most traffic is direct, consider switching your website to HTTPS. Your traffic will be easier to track, and you’ll be comfortable knowing your site and users are secure. With proper traffic tracking and improved security, the HTTPS protocol is a win-win for your site.

6. Links to external or offline documents

Content analytics platforms can’t trace traffic from links in external or offline documents, such as Microsoft Word files, slide decks, whitepapers, and PDFs. Once a resource is downloaded and opened offline (where no JavaScript tracking runs), clicks on its links arrive without referral data and can’t be attributed.

Keep as many of your resources online as possible, so you can track traffic on these pages. Instead of sharing a downloadable presentation, you might publish the slides in a blog post. If you do provide offline resources, add UTM parameters to every link in them.
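If your offline documents are generated from text (for example, a draft that is later exported to PDF), you could tag every link before export. This Python sketch rewrites each http(s) URL in a block of text. The URL regex is deliberately simple, and the default utm_medium value ("pdf") and the numbered utm_content values are hypothetical conventions, chosen so you can tell which link in the document was clicked.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Simple URL matcher: stops at whitespace, angle brackets, and parentheses.
URL_PATTERN = re.compile(r"https?://[^\s<>()]+")


def tag_links(text: str, source: str, campaign: str, medium: str = "pdf") -> str:
    """Return text with UTM parameters added to every URL it contains."""
    counter = 0

    def add_utm(match: re.Match) -> str:
        nonlocal counter
        url = match.group(0)
        # Keep sentence punctuation that the regex swallowed outside the URL.
        core = url.rstrip(".,;:!?")
        trailing = url[len(core):]
        counter += 1
        parts = urlsplit(core)
        query = parse_qsl(parts.query, keep_blank_values=True)
        query += [
            ("utm_source", source),
            ("utm_medium", medium),
            ("utm_campaign", campaign),
            ("utm_content", f"link-{counter}"),  # position of the link in the document
        ]
        return urlunsplit(parts._replace(query=urlencode(query))) + trailing

    return URL_PATTERN.sub(add_utm, text)


draft = "See pricing at https://example.com/pricing. Book a demo: https://example.com/demo?ref=deck"
print(tag_links(draft, source="whitepaper", campaign="q3_report"))
```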

How to attribute your direct traffic with content analytics

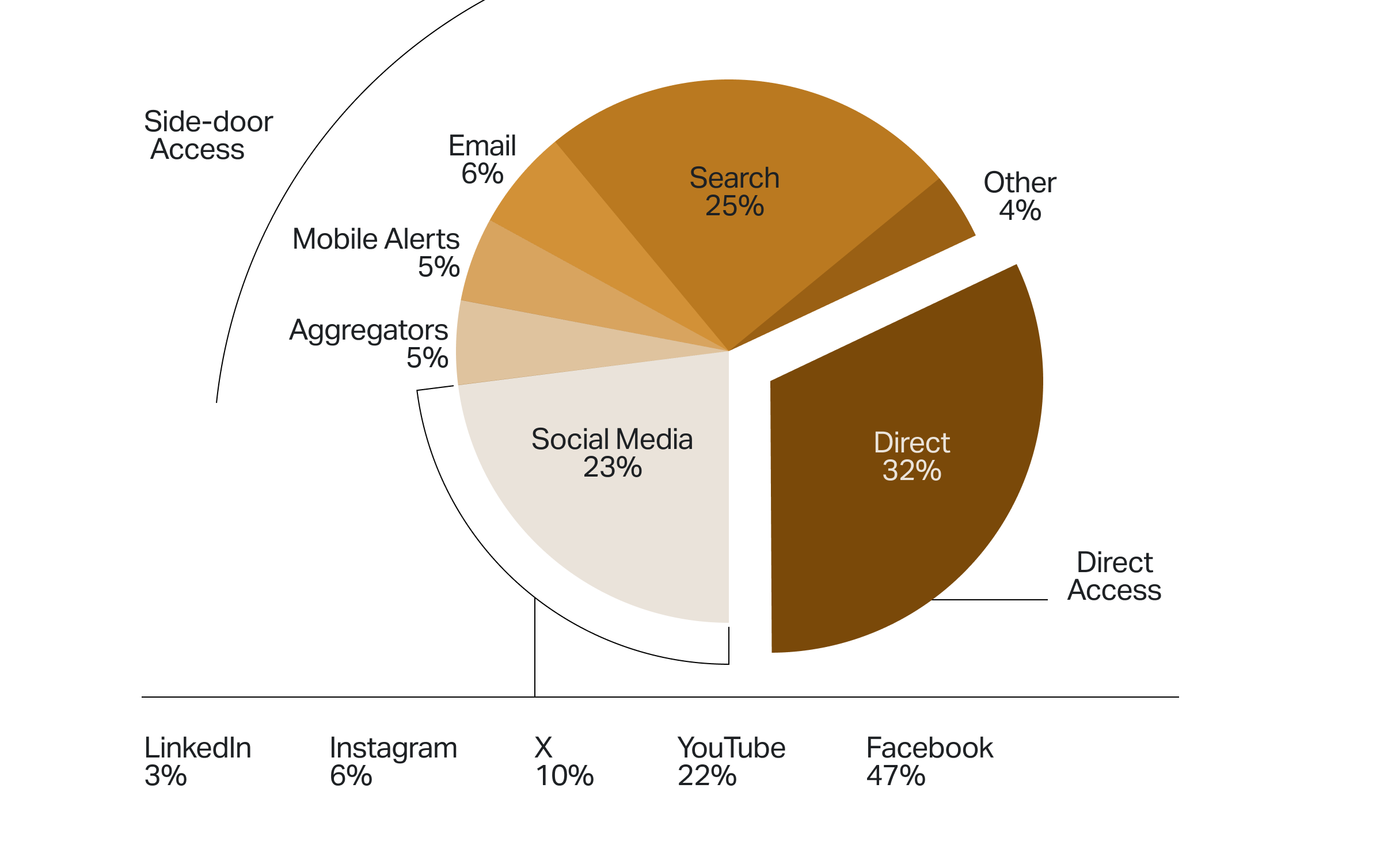

Despite its popularity, Google Analytics offers weak direct traffic reporting. The tool can’t clear up common traffic questions, and the data it provides isn’t terribly actionable. Content analytics tools, however, can help you determine where your site visitors are coming from. Parse.ly can unlock additional traffic information from social platforms. We visualize and sort referrer data into categories so users can make sense of their traffic sources.

Your analytics are useless if they’re not easily understandable and shareable. That’s why the Parse.ly Dashboard shows traffic sources and the pageviews from each one, groups referrers by channel, and displays other key content performance data such as engaged time, all on one page.
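Grouping referrers by channel comes down to mapping each referring hostname to a category. The sketch below illustrates the general idea; it is not Parse.ly’s implementation, and the short domain lists are hypothetical placeholders for the much larger lists real tools maintain. Visits with no referrer at all fall into the direct bucket.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlsplit

# Placeholder domain lists; real analytics tools maintain far larger ones.
CHANNEL_DOMAINS = {
    "search": ("google.com", "bing.com", "duckduckgo.com"),
    "social": ("facebook.com", "t.co", "linkedin.com", "reddit.com"),
}


def matches(host: str, domain: str) -> bool:
    """True if host is domain or one of its subdomains (e.g. l.facebook.com)."""
    return host == domain or host.endswith("." + domain)


def channel_for(referrer: Optional[str], site_host: str) -> str:
    if not referrer:
        return "direct"  # no referrer: the catch-all bucket
    host = (urlsplit(referrer).hostname or "").lower()
    if matches(host, site_host):
        return "internal"
    for channel, domains in CHANNEL_DOMAINS.items():
        if any(matches(host, d) for d in domains):
            return channel
    return "other"


def pageviews_by_channel(referrers: Iterable[Optional[str]], site_host: str) -> Counter:
    return Counter(channel_for(r, site_host) for r in referrers)


hits = ["", "https://www.google.com/", "https://l.facebook.com/l.php?u=x",
        "https://news.example.net/story", "https://example.com/blog", None]
print(pageviews_by_channel(hits, "example.com"))
```

Matching on domain suffixes rather than substrings avoids false positives: a plain substring check for "t.co" would wrongly classify microsoft.com as social.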

With this visualization, your referrers are contextualized by your content’s metadata (sections, tags, authors, word count, media type, etc.). This gives Parse.ly users a significant edge: they can understand their content performance at a glance and act on the data quickly.

And what about search bots and AI crawlers?

The rise of rogue direct traffic from bots is a tricky matter. It’s an arms race between bot development and detection and can cause discrepancies between analytics products.

No analytics platform will say, “Here’s exactly how we block bots,” so there are bound to be differences in how Parse.ly, GA, Adobe, and others mitigate bot traffic. That said, we always recommend that customers block bots from reaching their site in the first place—so they never trigger analytics code.

One way to fight against bot traffic is at the website host level. For example, the WordPress VIP CMS platform includes a Deny Requests Based on User Agent feature. This blocks requests from specific user agents, such as AI crawlers and unwanted bots, before they reach your application.
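The same idea can be illustrated at the application layer. The WSGI middleware below is a generic Python sketch, not WordPress VIP’s feature: for requests whose User-Agent contains a blocked token, it returns 403 Forbidden before the wrapped app runs, so the page (and its analytics tag) is never served. GPTBot (OpenAI) and CCBot (Common Crawl) are real crawler tokens used here as examples; the list itself is an assumption you would maintain. User-Agent strings are easy to spoof, so this only stops bots that identify themselves honestly.

```python
# Example tokens only; maintain your own list of crawlers to deny.
BLOCKED_AGENT_TOKENS = ("GPTBot", "CCBot")


class DenyUserAgents:
    """WSGI middleware that rejects requests from listed user agents."""

    def __init__(self, app, tokens=BLOCKED_AGENT_TOKENS):
        self.app = app
        self.tokens = tuple(t.lower() for t in tokens)

    def __call__(self, environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in agent for token in self.tokens):
            # Stop here: the wrapped app, and the analytics code in its pages, never runs.
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return self.app(environ, start_response)


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


app = DenyUserAgents(hello_app)
# To try it locally:
# from wsgiref.simple_server import make_server
# make_server("", 8000, app).serve_forever()
```

Blocking at the host or CDN level, as the VIP feature does, is still preferable because the request never reaches your application server at all.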

By better controlling the traffic hitting your site, you can ensure that unwanted traffic doesn’t impact your app’s performance—or inflate your direct traffic numbers.

Clear up your direct traffic questions with Parse.ly

Curious about what insights Parse.ly can uncover about your website’s direct traffic?

Author

Greg Ogarrio, Content Marketer—WordPress VIP