robots.txt

A robots.txt file is automatically generated for all WordPress sites. Though the file cannot be edited directly, its contents can be modified with WordPress actions and filters.

Default settings

If a WordPress single site, or the main site (ID 1) of a WordPress multisite, is still mapped to a convenience domain, the output of robots.txt is hard-coded and cannot be modified (except by the temporary override described in Test modifications).

In addition to the hard-coded robots.txt, every request to any URL on that environment returns an x-robots-tag: noindex, nofollow response header. These settings are intended to prevent search engines from indexing content hosted on non-production sites or unlaunched production sites.

The hard-coded output of robots.txt for an environment accessible only by a convenience domain is:

```
User-agent: *
Disallow: /
```

Environments with an assigned primary domain

After a custom domain is assigned as the primary domain for an environment (production or non-production), the output of robots.txt is automatically updated with rules that make all frontend content accessible for indexing by search engines.

The default output of robots.txt for an environment with a custom domain assigned as the primary domain is:

```
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
```

If the content of a WordPress environment with an assigned primary domain should not be accessible for indexing by search engines, the output of robots.txt can be programmatically modified. In addition, a setting in a site’s WordPress Admin dashboard can be enabled to discourage search engines.

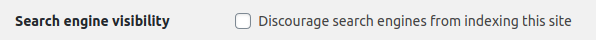

- In the WP Admin, select Settings -> Reading from the left-hand navigation menu.

- Locate the setting labeled “Search engine visibility” and select the checkbox labeled “Discourage search engines from indexing this site”.

- Select the button labeled “Save Changes” to save the setting.
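The “Search engine visibility” setting is stored in the blog_public option, and WordPress core passes a value derived from it to robots_txt filter callbacks as the second argument ($public). The following is a minimal sketch, assuming that behavior, that combines the setting with a programmatic change: while the site is set to discourage search engines, the callback replaces the robots.txt output with a rule that disallows crawling of the entire site. The function name is hypothetical, and, like any robots.txt modification, it only takes effect on an environment with a custom primary domain.

```php
<?php
/**
 * Sketch: disallow all crawling while "Discourage search engines from
 * indexing this site" is enabled. The function name is hypothetical.
 *
 * @param string $output The robots.txt output generated so far.
 * @param bool   $public Whether the site is public (false when the setting is enabled).
 * @return string
 */
function my_robots_txt_disallow_when_not_public( $output, $public ) {
	if ( ! $public ) {
		// Replace all existing rules with a single site-wide Disallow rule.
		return 'User-agent: *' . PHP_EOL . 'Disallow: /' . PHP_EOL;
	}

	// The site is public: return the existing rules unchanged.
	return $output;
}

// Register the callback only when running inside WordPress.
if ( function_exists( 'add_filter' ) ) {
	add_filter( 'robots_txt', 'my_robots_txt_disallow_when_not_public', 10, 2 );
}
```

Because this callback replaces the output instead of appending to it, any rules added by callbacks that ran earlier on the robots_txt filter are discarded while the setting is enabled.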

Modify the robots.txt file

Prerequisite

The output of a site’s robots.txt can only be modified if the site’s environment (production or non-production) has a custom domain set as the primary domain.

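To modify robots.txt for a site, hook into the do_robotstxt action or filter the output by hooking into the robots_txt filter. In WordPress core, the do_robotstxt action fires before the default rules are printed, so anything a callback echoes appears at the top of robots.txt. The robots_txt filter receives the generated output as a string, along with a value indicating whether the site is public (the “Search engine visibility” setting), and must return the modified string.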

In most cases, custom code to override the robots.txt file can be added to a theme’s functions.php file. For sites that require more tailored search engine crawling directives, custom code can be selectively added and enabled with a site-specific plugin.
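A site-specific plugin can be as small as a single PHP file with a plugin header. The following is a minimal sketch under stated assumptions: the plugin name, the helper my_robots_txt_group(), the rule paths, and the ExampleBot user agent are all hypothetical placeholders. The helper builds one robots.txt group (a User-agent line followed by Disallow lines), and the robots_txt callback appends one group per entry in an array, which keeps the rules easy to review and extend.

```php
<?php
/**
 * Plugin Name: My Site Robots.txt Rules
 * Description: Hypothetical site-specific plugin that adds robots.txt directives.
 */

/**
 * Build one robots.txt group: a User-agent line followed by Disallow lines.
 *
 * @param string   $user_agent User-agent token, for example '*'.
 * @param string[] $paths      Paths to disallow.
 * @return string The group, with each line terminated by PHP_EOL.
 */
function my_robots_txt_group( $user_agent, array $paths ) {
	$lines = array( 'User-agent: ' . $user_agent );
	foreach ( $paths as $path ) {
		$lines[] = 'Disallow: ' . $path;
	}
	return implode( PHP_EOL, $lines ) . PHP_EOL;
}

/**
 * robots_txt filter callback: append site-specific groups to the output.
 *
 * @param string $output Existing robots.txt output.
 * @param bool   $public Whether the site is public (unused here).
 * @return string
 */
function my_site_robots_txt( $output, $public ) {
	// Hypothetical rules: user-agent token => paths to disallow.
	$rules = array(
		'*'          => array( '/private-directory/' ),
		'ExampleBot' => array( '/' ),
	);

	foreach ( $rules as $user_agent => $paths ) {
		// Prefix a newline so each group is separated from the preceding rules.
		$output .= PHP_EOL . my_robots_txt_group( $user_agent, $paths );
	}

	return $output;
}

// Register the callback only when running inside WordPress.
if ( function_exists( 'add_filter' ) ) {
	add_filter( 'robots_txt', 'my_site_robots_txt', 10, 2 );
}
```

Groups that repeat a user agent already present in robots.txt, such as *, are combined into one group by crawlers that follow RFC 9309 (the Robots Exclusion Protocol).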

Action

In this code example, the do_robotstxt action is used to disallow crawling of a specific directory for all user agents:

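```php
function my_robotstxt_disallow_directory() {
	// do_robotstxt fires before WordPress core prints its default rules,
	// so these lines appear at the top of robots.txt.
	echo 'User-agent: *' . PHP_EOL;
	echo 'Disallow: /path/to/your/directory/' . PHP_EOL;
}

add_action( 'do_robotstxt', 'my_robotstxt_disallow_directory' );
```

Filter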

In this code example, the output of robots.txt is modified using the robots_txt filter:

```php
function my_robots_txt_disallow_private_directory( $output, $public ) {
	// $output contains the robots.txt rules generated so far.
	$output .= 'Disallow: /wp-admin/' . PHP_EOL;
	$output .= 'Allow: /wp-admin/admin-ajax.php' . PHP_EOL;

	// Add custom rules here
	$output .= 'Disallow: /private-directory/' . PHP_EOL;
	$output .= 'Allow: /public-directory/' . PHP_EOL;

	// A robots_txt callback must return the (modified) output.
	return $output;
}

add_filter( 'robots_txt', 'my_robots_txt_disallow_private_directory', 10, 2 );
```

Disallow AI crawlers

Use the robots_txt filter to disallow artificial intelligence (AI) crawlers from crawling a site.

Note

Because robots.txt rules are only followed by crawlers that choose to honor them, additional restrictions on a site’s content can be put in place for AI crawlers with the VIP_Request_Block utility class.

In this code example, a site’s robots.txt is configured to disallow crawling by the User-Agents of well-known AI crawlers (e.g., OpenAI’s GPTBot).

Only 4 AI crawlers are included in this code example, though far more exist. Customers should research which AI crawler User-Agents should be disallowed for their site and include them in a modified version of this code example.

```php
function my_robots_txt_block_ai_crawlers( $output, $public ) {
	// The leading newline inside the string separates these groups from the existing rules.
	$output .= '
## OpenAI GPTBot crawler (https://platform.openai.com/docs/gptbot)
User-agent: GPTBot
Disallow: /

## OpenAI ChatGPT service (https://platform.openai.com/docs/plugins/bot)
User-agent: ChatGPT-User
Disallow: /

## Common Crawl crawler (https://commoncrawl.org/faq)
User-agent: CCBot
Disallow: /

## Google-Extended token, used for Gemini, formerly Bard (https://developers.google.com/search/docs/crawling-indexing/overview-google-crawlers)
User-agent: Google-Extended
Disallow: /
';

	return $output;
}

add_filter( 'robots_txt', 'my_robots_txt_block_ai_crawlers', 10, 2 );
```

Test modifications

Modifications made to robots.txt should be tested on a non-production environment first. If the non-production environment is in an unlaunched state with a convenience domain, the environment’s hard-coded robots.txt must be temporarily overridden to allow for testing.
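Because the override is meant to be temporary, one way to keep it from ever running on a launched production site is to apply it only outside production. The following is a minimal sketch, assuming the VIP_GO_APP_ENVIRONMENT constant holds the environment name (for example, production); the helper function name is hypothetical. The remove_filter() call is the same one used in this section, with only an environment check added around it.

```php
<?php
/**
 * Hypothetical helper: decide whether the testing override should run.
 * Assumes VIP_GO_APP_ENVIRONMENT holds the environment name, such as 'production'.
 *
 * @return bool True only on a known non-production environment.
 */
function my_should_override_convenience_robots_txt() {
	// If the environment cannot be determined, do not override.
	if ( ! defined( 'VIP_GO_APP_ENVIRONMENT' ) ) {
		return false;
	}
	return 'production' !== VIP_GO_APP_ENVIRONMENT;
}

// Remove the hard-coded convenience-domain robots.txt filter only outside production.
if ( function_exists( 'remove_filter' ) && my_should_override_convenience_robots_txt() ) {
	remove_filter( 'robots_txt', 'Automattic\VIP\Core\Privacy\vip_convenience_domain_robots_txt' );
}
```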

Add the following code to override the environment’s hard-coded robots.txt:

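```php
// Overrides the hard-coded convenience-domain robots.txt; remove this line after testing.
remove_filter( 'robots_txt', 'Automattic\VIP\Core\Privacy\vip_convenience_domain_robots_txt' );
```

Caching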

The /robots.txt file is cached for long periods of time by the page cache. After changes are made to /robots.txt, the cached version can be purged by using the VIP Dashboard or VIP-CLI.

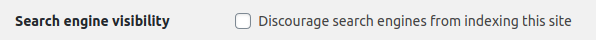

The cached version of /robots.txt can also be cleared from within the WordPress Admin dashboard.

- In the WP Admin, select Settings -> Reading from the left-hand navigation menu.

- Change the “Search engine visibility” setting and select the button labeled “Save Changes”, then change the setting back to its original value and select “Save Changes” again.

Last updated: April 16, 2024